Google has run a sting operation that it says proves Bing has been watching what people search for on Google and the sites they select from Google’s results, then using that information to improve Bing’s own search listings. Bing doesn’t deny this.

As a result of the apparent monitoring, Bing’s relevancy is potentially improving (or getting worse) on the back of Google’s own work. Google likens it to the digital equivalent of Bing leaning over during an exam and copying off of Google’s test.

“I’ve spent my career in pursuit of a good search engine,” says Amit Singhal, a Google Fellow who oversees the search engine’s ranking algorithm. “I’ve got no problem with a competitor developing an innovative algorithm. But copying is not innovation, in my book.”

Bing doesn’t deny Google’s claim. Indeed, the statement that Stefan Weitz, director of Microsoft’s Bing search engine, emailed me yesterday as I worked on this article seems to confirm the allegation:

For example, consider a search for torsoraphy, which causes Google to return this:

In the example above, Google’s searched for the correct spelling — tarsorrhaphy — even though torsoraphy was entered. Notice the top listing for the corrected spelling is a page about the medical procedure at Wikipedia.

Over at Bing, the misspelling is NOT corrected — but somehow, Bing manages to list the same Wikipedia page at the top of its results as Google does for its corrected spelling results:

Got it? Despite the word being misspelled — and the misspelling not being corrected — Bing still manages to get the right page from Wikipedia at the top of its results, one of four total pages it finds from across the web. How did it do that?

It’s a point of pride to Google that it believes it has the best spelling correction system of any search engine. Google even claims that it can even correct misspellings that have never been searched on before. Engineers on the spelling correction team closely watch to see if they’re besting competitors on unusual terms.

So when misspellings on Bing for unusual words — such as above — started generating the same results as with Google, red flags went up among the engineers.

By no means did Bing have exactly the same search results as Google. There were plenty of queries where the listings had major differences. However, the increases were indicative that Bing had made some change to its search algorithm which was causing its results to be more Google-like.

Now Google began to strongly suspect that Bing might be somehow copying its results, in particular by watching what people were searching for at Google. There didn’t seem to be any other way it could be coming up with such similar matches to Google, especially in cases where spelling corrections were happening.

Google thought Microsoft’s Internet Explorer browser was part of the equation. Somehow, IE users might have been sending back data of what they were doing on Google to Bing. In particular, Google told me it suspected either the Suggested Sites feature in IE or the Bing toolbar might be doing this.

To verify its suspicions, Google set up a sting operation. For the first time in its history, Google crafted one-time code that would allow it to manually rank a page for a certain term (code that will soon be removed, as described further below). It then created about 100 of what it calls “synthetic” searches, queries that few people, if anyone, would ever enter into Google.

To verify its suspicions, Google set up a sting operation. For the first time in its history, Google crafted one-time code that would allow it to manually rank a page for a certain term (code that will soon be removed, as described further below). It then created about 100 of what it calls “synthetic” searches, queries that few people, if anyone, would ever enter into Google.

These searches returned no matches on Google or Bing — or a tiny number of poor quality matches, in a few cases — before the experiment went live. With the code enabled, Google placed a honeypot page to show up at the top of each synthetic search.

The only reason these pages appeared on Google was because Google forced them to be there. There was nothing that made them naturally relevant for these searches. If they started to appeared at Bing after Google, that would mean that Bing took Google’s bait and copied its results.

This all happened in December. When the experiment was ready, about 20 Google engineers were told to run the test queries from laptops at home, using Internet Explorer, with Suggested Sites and the Bing Toolbar both enabled. They were also told to click on the top results. They started on December 17. By December 31, some of the results started appearing on Bing.

Here’s an example, which is still working as I write this, hiybbprqag at Google:

and the same exact match at Bing:

Here’s another, for mbzrxpgjys at Google:

and the same match at Bing:

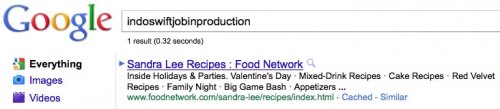

Here’s one more, this time for indoswiftjobinproduction, at Google:

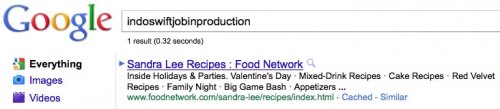

And at Bing:

To be clear, before the test began, these queries found either nothing or a few poor quality results on Google or Bing. Then Google made a manual change, so that a specific page would appear at the top of these searches, even though the site had nothing to do with the search. Two weeks after that, some of these pages began to appear on Bing for these searches.

It strongly suggests that Bing was copying Google’s results, by watching what some people do at Google via Internet Explorer.

As I wrote earlier, Bing is far from identical to Google for many queries. This suggests that even if Bing is using search activity at Google to improve its results, that’s only one of many signals being considered.

Search engines all have ranking algorithms that use various signals to determine which pages should come first. What words are used on the page? How many links point at that page? How important are those links estimated to be? What words appear in the links pointing at the page? How important is the web site estimated to be? These are just some of the signals that both Bing and Google use.

Google’s test suggests that when Bing has many of the traditional signals, as is likely for popular search topics, it relies mostly on those. But in cases where Bing has fewer trustworthy signals, such as “long tail” searches that bring up fewer matches, then Bing might lean more on how Google ranks pages for those searches.

In cases where there are no signals other than how Google ranks things, such as with the synthetic queries that Google tested, then the Google “signal” may come through much more.

Internet Explorer makes clear (to those who bother to read its privacy policy) that by default, it’s going to capture some of your browsing data, unless you switch certain features off. It may also gather more data if you enable some features.

Suggested Sites is one of likely ways that Bing may have been gathering information about what’s happening on Google. This is a feature (shown to the right) that suggests other sites to visit, based on the site you’re viewing.

Suggested Sites is one of likely ways that Bing may have been gathering information about what’s happening on Google. This is a feature (shown to the right) that suggests other sites to visit, based on the site you’re viewing.

Microsoft does disclose that Suggested Sites collects information about sites you visit. From the privacy policy:

It makes sense that the Suggested Sites feature needs to report the URL you’re viewing back to Microsoft. Otherwise, it doesn’t know what page to show you suggestions for. The Google Toolbar does the same thing, tells Google what page you’re viewing, if you have the PageRank feature enabled.

But to monitor what you’re clicking on in search results? There’s no reason I can see for Suggested Sites to do that — if it indeed does. But even if it does log clicks, Microsoft may feel that this is “standard computer information” that the policy allows to be collected.

The install page highlights some of what will be collected and how it will be used:

It’s hard to argue that gathering information about what people search for at Google isn’t covered. Technically, there’s nothing misleading — even if Bing, for obvious reasons, isn’t making it explicit that to improve its search results, it might look at what Bing Bar users search for at Google and click on there.

“Absolutely not. The PageRank feature sends back URLs, but we’ve never used those URLs or data to put any results on Google’s results page. We do not do that, and we will not do that,” said Singhal.

Actually, Google has previously said that the toolbar does play a role in ranking. Google uses toolbar data in part to measure site speed — and site speed was a ranking signal that Google began using last year.

Instead, Singhal seems to be saying that the URLs that the toolbar sees are not used for finding pages to index (something Google’s long denied) or to somehow find new results to add to the search results.

As for Chrome, Google says the same thing — there’s no information flowing back that’s used to improve search rankings. In fact, Google stressed that the only information that flows back at all from Chrome is what people are searching for from within the browser, if they are using Google as their search engine.

On the one hand, you could say it’s incredibly clever. Why not mine what people are selecting as the top results on Google as a signal? It’s kind of smart. Indeed, I’m pretty sure we’ve had various small services in the past that have offered for people to bookmark their top choices from various search engines.

Google doesn’t see it as clever.

“It’s cheating to me because we work incredibly hard and have done so for years but they just get there based on our hard work,” said Singhal. “I don’t know how else to call it but plain and simple cheating. Another analogy is that it’s like running a marathon and carrying someone else on your back, who jumps off just before the finish line.”

In particular, Google seems most concerned that the impact of mining user data on its site potentially pays off the most for Bing on long-tail searches, unique searches where Google feels it works especially hard to distinguish itself.

Google also stressed to me that the code only worked for this limited set of synthetic queries — and that it had an additional failsafe. Should any of the test queries suddenly become even mildly popular for some reason, the honeypot page for that query would no longer show.

This means if you test the queries above, you may no longer see the same results at Google. However, I did see all these results myself before writing this, along with some additional ones that I’ve not done screenshots for. So did several of my other editors yesterday.

Recall that Google got its experiment confirmed on December 31. The next day — New Year’s Day — TechCrunch ran an article called Why We Desperately Need a New (and Better) Google from guest author Vivek Wadhwa, praising Blekko for having better date search than Google and painting a generally dismal picture of Google’s relevancy overall.

I doubt Google had any idea that Wadhwa’s article was coming, and I’m virtually certain Wadhwa had no idea about Google’s testing of Bing. But his article kicked off a wave of “Google’s results suck” posts.

Trouble In the House of Google from Jeff Atwood of Coding Horror appeared on January 3; Google’s decreasingly useful, spam-filled web search from Marco Arment of Instapaper came out on January 5. Multiple people mistakenly reported Paul Kedrosky’s December 2009 article about struggling to research a dishwasher as also being part of the current wave. It wasn’t, but on January 11, Kedrosky weighed in with fresh thoughts in Curation is the New Search is the New Curation.

The wave kept going. It’s still going. Along the way, Search Engine Land itself had several pieces, with Conrad Saam’s column on January 12, Google vs. Bing: The Fallacy Of The Superior Search Engine, gaining a lot of attention. In it, he did a short survey of 20 searches and concluded that Google and Bing weren’t that different.

The day after that, Bing contacted me. They were hosting an event on February 1 to talk about the state of search and wanted to make sure I had the date saved, in case I wanted to come up for it. I said I’d make it. I later learned that the event was being organized by Wadhwa, author of that TechCrunch article.

A change on Google’s end shifted my meeting to January 28, last Friday. As is typical when I visit Google, I had a number of different meetings to talk about various products and issues. My last meeting of the day was with Singhal and Cutts — where they shared everything I’ve described above, explaining this is one reason why Google and Bing might be looking so similar, as our columnist found.

Yes, they wanted the news to be out before the Bing event happened — an event that Google is participating in. They felt it was important for the overall discussion about search quality. But the timing of the news being so close to the event is down to when I could make the trip to Google. If I’d been able to go in earlier, then I might have been writing this a week ago.

Meanwhile, you have this odd timing of Wadhwa’s TechCrunch article and the Bing event he’s organizing. I have no idea if Wadhwa was booked to do the Bing event before his article went out or if he was contracted to do this after, perhaps because Bing saw the debate over Google’s quality kick off and decided it was good to ride it. I’ll try to find out.

In the end, for whatever reasons, the findings of Google’s experiment and Bing’s event are colliding, right in the middle of a renewed focus of attention on search quality. Was this all planned to happen? Gamesmanship by both Google and Bing? Just odd coincidences? I go with the coincidences, myself.

[Postscript: Wadhwa tweeted the event timing was a coincidence. And let me add, my assumption really was that this is all coincidence. I'm pointing it out mainly because there are just so many crazy things all happening at the same time, which some people will inevitably try to connect. Make no mistake. Both Google and Bing play the PR game. But I think what's happening right now is that there's a perfect storm of various developments all coming together at the same time. And if that storm gets people focused on demanding better search quality, I'm happy].

I’ve long written that every search engine has its own “search voice,” a unique set of search results it provides, based on its collection of documents and its own particular method of ranking those.

I like that search engines have each had their own voices. One of the worst things about Yahoo changing over to Bing’s results last year was that in the US (and in many countries around the world), we were suddenly down to only two search voices: Google’s and Bing’s.

For 15 years, I’ve covered search. In all that time, we’ve never had so few search voices as we do now. At one point, we had more than 10. That’s one thing I love about the launch of Blekko. It gave us a fresh, new search voice.

When Bing launched in 2009, the joke was that Bing stood for either “Because It’s Not Google” or “But It’s Not Google.” Mining Google’s searches makes me wonder if the joke should change to “Bing Is Now Google.”

I think Bing should develop its own search voice without using Google’s as a tuning fork. That just doesn’t ring true to me. But I look forward to talking with Bing more about the issue and hopefully getting more clarity from them about what they may be doing and their views on it.

Opening image from Real Genius. They were taking a test. There’s no suggestion that Google is cool Chris Knight or that Bing is dorky Kent (or vice versa). It’s a great movie.

As a result of the apparent monitoring, Bing’s relevancy is potentially improving (or getting worse) on the back of Google’s own work. Google likens it to the digital equivalent of Bing leaning over during an exam and copying off of Google’s test.

“I’ve spent my career in pursuit of a good search engine,” says Amit Singhal, a Google Fellow who oversees the search engine’s ranking algorithm. “I’ve got no problem with a competitor developing an innovative algorithm. But copying is not innovation, in my book.”

Bing doesn’t deny Google’s claim. Indeed, the statement that Stefan Weitz, director of Microsoft’s Bing search engine, emailed me yesterday as I worked on this article seems to confirm the allegation:

As you might imagine, we use multiple signals and approaches when we think about ranking, but like the rest of the players in this industry, we’re not going to go deep and detailed in how we do it. Clearly, the overarching goal is to do a better job determining the intent of the search, so we can guess at the best and most relevant answer to a given query.

Opt-in programs like the [Bing] toolbar help us with clickstream data, one of many input signals we and other search engines use to help rank sites. This “Google experiment” seems like a hack to confuse and manipulate some of these signals.

Later today, I’ll likely have a more detailed response from Bing. Microsoft wanted to talk further after a search event it is hosting today. More about that event, and how I came to be reporting on Google’s findings just before it began, comes at the end of this story. But first, here’s how Google’s investigation unfolded.

Postscript: Bing: Why Google’s Wrong In Its Accusations is the follow-up story from talking with Bing. Please be sure to read it after this. You’ll also find another link to it at the end of this article.

Hey, Does This Seem Odd To You?

Around late May of last year, Google told me it began noticing that Bing seemed to be doing exceptionally well at returning the same sites that Google would list, when someone would enter unusual misspellings.

For example, consider a search for torsoraphy, which causes Google to return this:

In the example above, Google’s searched for the correct spelling — tarsorrhaphy — even though torsoraphy was entered. Notice the top listing for the corrected spelling is a page about the medical procedure at Wikipedia.

Over at Bing, the misspelling is NOT corrected — but somehow, Bing manages to list the same Wikipedia page at the top of its results as Google does for its corrected spelling results:

Got it? Despite the word being misspelled — and the misspelling not being corrected — Bing still manages to get the right page from Wikipedia at the top of its results, one of four total pages it finds from across the web. How did it do that?

It’s a point of pride to Google that it believes it has the best spelling correction system of any search engine. Google even claims that it can correct misspellings that have never been searched for before. Engineers on the spelling correction team closely watch to see if they’re besting competitors on unusual terms.

So when misspellings on Bing for unusual words — such as above — started generating the same results as with Google, red flags went up among the engineers.

Google: Is Bing Copying Us?

More red flags went up in October 2010, when Google told me it noticed a marked rise in two key competitive metrics. Across a wide range of searches, Bing was showing a much greater overlap with Google’s top 10 results than in preceding months. In addition, there was an increase in the percentage of times both Google and Bing listed exactly the same page in the number one spot.

By no means did Bing have exactly the same search results as Google. There were plenty of queries where the listings had major differences. However, the increases were indicative that Bing had made some change to its search algorithm which was causing its results to be more Google-like.
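The two metrics Google describes, top 10 overlap and agreement on the number one result, are simple to compute once you have ranked result lists from both engines. What follows is a minimal sketch of that idea, not Google’s actual measurement code. The function names and the sample data are hypothetical and exist only for illustration.

```python
def top_k_overlap(results_a, results_b, k=10):
    """Share of engine A's top-k URLs that also appear in engine B's top-k."""
    top_a = results_a[:k]
    top_b = set(results_b[:k])
    if not top_a:
        return 0.0
    return sum(url in top_b for url in top_a) / len(top_a)


def same_top_result(results_a, results_b):
    """True when both engines list the same URL in the number one spot."""
    return bool(results_a) and bool(results_b) and results_a[0] == results_b[0]


def summarize(samples):
    """samples maps each query to a (results_a, results_b) pair of ranked URL lists."""
    if not samples:
        return {"mean_overlap": 0.0, "top1_agreement": 0.0}
    overlaps = [top_k_overlap(a, b) for a, b in samples.values()]
    top1 = [same_top_result(a, b) for a, b in samples.values()]
    return {
        "mean_overlap": sum(overlaps) / len(overlaps),
        "top1_agreement": sum(top1) / len(top1),
    }


# Hypothetical sample data, for illustration only.
samples = {
    "query one": (
        ["a.example", "b.example", "c.example"],
        ["a.example", "d.example", "b.example"],
    ),
    "query two": (
        ["e.example", "f.example"],
        ["g.example", "e.example"],
    ),
}
print(summarize(samples))
```

Tracking these two numbers month over month is what would reveal the kind of rise Google says it saw.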

Now Google began to strongly suspect that Bing might be somehow copying its results, in particular by watching what people were searching for at Google. There didn’t seem to be any other way it could be coming up with such similar matches to Google, especially in cases where spelling corrections were happening.

Google thought Microsoft’s Internet Explorer browser was part of the equation. Somehow, IE users might have been sending back data of what they were doing on Google to Bing. In particular, Google told me it suspected either the Suggested Sites feature in IE or the Bing toolbar might be doing this.

To Sting A Bing

To verify its suspicions, Google set up a sting operation. For the first time in its history, Google crafted one-time code that would allow it to manually rank a page for a certain term (code that will soon be removed, as described further below). It then created about 100 of what it calls “synthetic” searches, queries that few people, if anyone, would ever enter into Google.

These searches returned no matches on Google or Bing — or a tiny number of poor quality matches, in a few cases — before the experiment went live. With the code enabled, Google placed a honeypot page to show up at the top of each synthetic search.

The only reason these pages appeared on Google was that Google forced them to be there. There was nothing that made them naturally relevant for these searches. If they started to appear at Bing after Google, that would mean that Bing took Google’s bait and copied its results.

This all happened in December. When the experiment was ready, about 20 Google engineers were told to run the test queries from laptops at home, using Internet Explorer, with Suggested Sites and the Bing Toolbar both enabled. They were also told to click on the top results. They started on December 17. By December 31, some of the results started appearing on Bing.

Here’s an example, which is still working as I write this, hiybbprqag at Google:

and the same exact match at Bing:

Here’s another, for mbzrxpgjys at Google:

and the same match at Bing:

Here’s one more, this time for indoswiftjobinproduction, at Google:

And at Bing:

To be clear, before the test began, these queries found either nothing or a few poor quality results on Google or Bing. Then Google made a manual change, so that a specific page would appear at the top of these searches, even though the site had nothing to do with the search. Two weeks after that, some of these pages began to appear on Bing for these searches.

It strongly suggests that Bing was copying Google’s results, by watching what some people do at Google via Internet Explorer.
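The check at the heart of the sting can be sketched in a few lines: for each synthetic query, see whether the planted page turns up as the other engine’s top result. This is only an illustration of the logic, not Google’s code. The function name is hypothetical, and the `example.com` URLs are placeholders standing in for the real honeypot pages, which are not reproduced here.

```python
def copied_honeypots(planted, observed_top):
    """Return the synthetic queries whose planted page is the other engine's top result.

    planted:      query -> honeypot URL forced to the top on the first engine
    observed_top: query -> top URL seen on the second engine (missing or None if no results)
    """
    return sorted(
        query for query, page in planted.items()
        if observed_top.get(query) == page
    )


# Query strings come from the article; the URLs are placeholders.
planted = {
    "hiybbprqag": "https://example.com/page-a",
    "mbzrxpgjys": "https://example.com/page-b",
    "indoswiftjobinproduction": "https://example.com/page-c",
}

# Hypothetical observations: one match, one query with no results, one never checked.
observed_top = {
    "hiybbprqag": "https://example.com/page-a",
    "mbzrxpgjys": None,
}

hits = copied_honeypots(planted, observed_top)
print(hits, len(hits) / len(planted))
```

Because the planted pages have no natural relevance to the queries, any hit is hard to explain by coincidence, which is why even a low hit rate is informative.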

The Google Ranking Signal

Only a small number of the test searches produced this result, about 7 to 9 (depending on when exactly Google checked) out of the 100. Google says it doesn’t know why they didn’t all work, but even having a few appear was enough to convince the company that Bing was copying its results.

As I wrote earlier, Bing is far from identical to Google for many queries. This suggests that even if Bing is using search activity at Google to improve its results, that’s only one of many signals being considered.

Search engines all have ranking algorithms that use various signals to determine which pages should come first. What words are used on the page? How many links point at that page? How important are those links estimated to be? What words appear in the links pointing at the page? How important is the web site estimated to be? These are just some of the signals that both Bing and Google use.

Google’s test suggests that when Bing has many of the traditional signals, as is likely for popular search topics, it relies mostly on those. But in cases where Bing has fewer trustworthy signals, such as “long tail” searches that bring up fewer matches, then Bing might lean more on how Google ranks pages for those searches.

In cases where there are no signals other than how Google ranks things, such as with the synthetic queries that Google tested, then the Google “signal” may come through much more.
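To see why a minor signal can decide the outcome when nothing else is available, consider a toy weighted score. This is purely an illustrative model and not how Bing or Google actually rank pages. The signal names, weights and values are all made up.

```python
# Hypothetical weights: the clickstream signal counts for little on its own.
WEIGHTS = {"on_page_text": 0.4, "inbound_links": 0.4, "clickstream": 0.2}


def score(signals):
    """Weighted sum of whatever signals exist for a page; missing signals count as zero."""
    return sum(weight * signals.get(name, 0.0) for name, weight in WEIGHTS.items())


def rank(candidates):
    """candidates maps URL -> signal dict; returns URLs ordered best first."""
    return sorted(candidates, key=lambda url: score(candidates[url]), reverse=True)


# Popular query: the traditional signals are strong, so clickstream barely matters.
popular = {
    "established.example": {"on_page_text": 0.9, "inbound_links": 0.8, "clickstream": 0.1},
    "clicked.example": {"on_page_text": 0.3, "inbound_links": 0.2, "clickstream": 0.9},
}

# Synthetic query: no page has text or link signals, so clickstream is all there is.
synthetic = {
    "unrelated.example": {},
    "honeypot.example": {"clickstream": 1.0},
}

print(rank(popular))
print(rank(synthetic))
```

In the popular case the established page wins despite fewer clicks. In the synthetic case the only page with any signal at all rises to the top.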

Do Users Know (Or Care)?

Do Internet Explorer users know that they might be helping Bing in the way Google alleges? Technically, yes — as best I can tell. Explicitly, absolutely not.

Internet Explorer makes clear (to those who bother to read its privacy policy) that by default, it’s going to capture some of your browsing data, unless you switch certain features off. It may also gather more data if you enable some features.

Suggested Sites

Suggested Sites is one of the likely ways that Bing may have been gathering information about what’s happening on Google. This is a feature (shown to the right) that suggests other sites to visit, based on the site you’re viewing.

Microsoft does disclose that Suggested Sites collects information about sites you visit. From the privacy policy:

When Suggested Sites is turned on, the addresses of websites you visit are sent to Microsoft, together with standard computer information.

To help protect your privacy, the information is encrypted when sent to Microsoft. Information associated with the web address, such as search terms or data you entered in forms might be included.

For example, if you visited the Microsoft.com search website at http://search.microsoft.com and entered “Seattle” as the search term, the full address http://search.microsoft.com/results.aspx?q=Seattle&qsc0=0&FORM=QBMH1&mkt=en-US will be sent.

I’ve bolded the key parts. What you’re searching on gets sent to Microsoft. Even though the example provided involves a search on Microsoft.com, the policy doesn’t prevent any search — including those at Google — from being sent back.
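The reason a reported URL reveals the search term is that the term travels in the address’s query string. A minimal sketch using Python’s standard library shows how anyone holding the full address from the policy’s own example could read the term back out. The helper name is hypothetical, and treating `q` as the parameter that carries the query is an assumption that holds for this example URL.

```python
from urllib.parse import parse_qs, urlsplit


def extract_search_terms(url, param_names=("q",)):
    """Pull search terms out of a URL's query string.

    param_names lists the query parameters assumed to carry the search term.
    """
    query = parse_qs(urlsplit(url).query)
    terms = []
    for name in param_names:
        terms.extend(query.get(name, []))
    return terms


# The full address quoted in Microsoft's privacy policy example.
url = "http://search.microsoft.com/results.aspx?q=Seattle&qsc0=0&FORM=QBMH1&mkt=en-US"
print(extract_search_terms(url))  # ['Seattle']
```

Nothing about this depends on the site being Microsoft.com, which is the point: any search engine that puts the query in the URL exposes it the same way.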

It makes sense that the Suggested Sites feature needs to report the URL you’re viewing back to Microsoft. Otherwise, it doesn’t know what page to show you suggestions for. The Google Toolbar does the same thing: it tells Google what page you’re viewing, if you have the PageRank feature enabled.

But to monitor what you’re clicking on in search results? There’s no reason I can see for Suggested Sites to do that — if it indeed does. But even if it does log clicks, Microsoft may feel that this is “standard computer information” that the policy allows to be collected.

The Bing Bar

There’s also the Bing Bar — a Bing toolbar — that Microsoft encourages people to install separately from Internet Explorer (IE may come with it pre-installed through some partner deals). When you install the toolbar, by default it is set to collect information to “improve” your experience, as you can see:

The install page highlights some of what will be collected and how it will be used:

“improve your online experience with personalized content by allowing us to collect additional information about your system configuration, the searches you do, websites you visit, and how you use our software. We will also use this information to help improve our products and services.”

Again, I’ve bolded the key parts. The Learn More page about the data the Bing Bar collects ironically says less than what’s directly on the install page.

It’s hard to argue that gathering information about what people search for at Google isn’t covered. Technically, there’s nothing misleading — even if Bing, for obvious reasons, isn’t making it explicit that to improve its search results, it might look at what Bing Bar users search for at Google and click on there.

What About The Google Toolbar & Chrome?

Google has its own Google Toolbar, as well as its Chrome browser. So I asked Google. Does it do the same type of monitoring that it believes Bing does, to improve Google’s search results?

“Absolutely not. The PageRank feature sends back URLs, but we’ve never used those URLs or data to put any results on Google’s results page. We do not do that, and we will not do that,” said Singhal.

Actually, Google has previously said that the toolbar does play a role in ranking. Google uses toolbar data in part to measure site speed — and site speed was a ranking signal that Google began using last year.

Instead, Singhal seems to be saying that the URLs that the toolbar sees are not used for finding pages to index (something Google’s long denied) or to somehow find new results to add to the search results.

As for Chrome, Google says the same thing — there’s no information flowing back that’s used to improve search rankings. In fact, Google stressed that the only information that flows back at all from Chrome is what people are searching for from within the browser, if they are using Google as their search engine.

Postscript: See Google On Toolbar: We Don’t Use Bing’s Searches

Is It Illegal?

Suffice to say, Google’s pretty unhappy with the whole situation, which does raise a number of issues. For one, is what Bing seems to be doing illegal? Singhal was “hesitant” to say that since Google technically hasn’t lost anything. It still has its own results, even if it feels Bing is mimicking them.

Is It Cheating?

If it’s not illegal, is what Bing may be doing unfair, somehow cheating at the search game?

On the one hand, you could say it’s incredibly clever. Why not mine what people are selecting as the top results on Google as a signal? It’s kind of smart. Indeed, I’m pretty sure we’ve had various small services in the past that have offered for people to bookmark their top choices from various search engines.

Google doesn’t see it as clever.

“It’s cheating to me because we work incredibly hard and have done so for years but they just get there based on our hard work,” said Singhal. “I don’t know how else to call it but plain and simple cheating. Another analogy is that it’s like running a marathon and carrying someone else on your back, who jumps off just before the finish line.”

In particular, Google seems most concerned that the impact of mining user data on its site potentially pays off the most for Bing on long-tail searches, unique searches where Google feels it works especially hard to distinguish itself.

Ending The Experiment

Now that Google’s test is done, it will be removing the one-time code it added to allow for the honeypot pages to be planted. Google has proudly claimed over the years that it had no such ability, as proof of letting its ranking algorithm make decisions. It has no plans to keep this new ability and wants to kill it, so things are back to “normal.”

Google also stressed to me that the code only worked for this limited set of synthetic queries — and that it had an additional failsafe. Should any of the test queries suddenly become even mildly popular for some reason, the honeypot page for that query would no longer show.

This means if you test the queries above, you may no longer see the same results at Google. However, I did see all these results myself before writing this, along with some additional ones that I’ve not done screenshots for. So did several of my other editors yesterday.

Why Open Up Now?

What prompted Google to step forward now and talk with me about its experiment? A grand plan to spoil Bing’s big search event today? A clever way to distract from current discussions about its search quality? Just a coincidence of timing? In the end, whatever you believe about why Google is talking now doesn’t really matter. The bigger issue is whether you believe what Bing is doing is fair play or not. But here’s the strange backstory.

Recall that Google got its experiment confirmed on December 31. The next day — New Year’s Day — TechCrunch ran an article called Why We Desperately Need a New (and Better) Google from guest author Vivek Wadhwa, praising Blekko for having better date search than Google and painting a generally dismal picture of Google’s relevancy overall.

I doubt Google had any idea that Wadhwa’s article was coming, and I’m virtually certain Wadhwa had no idea about Google’s testing of Bing. But his article kicked off a wave of “Google’s results suck” posts.

Trouble In the House of Google from Jeff Atwood of Coding Horror appeared on January 3; Google’s decreasingly useful, spam-filled web search from Marco Arment of Instapaper came out on January 5. Multiple people mistakenly reported Paul Kedrosky’s December 2009 article about struggling to research a dishwasher as also being part of the current wave. It wasn’t, but on January 11, Kedrosky weighed in with fresh thoughts in Curation is the New Search is the New Curation.

The wave kept going. It’s still going. Along the way, Search Engine Land itself had several pieces, with Conrad Saam’s column on January 12, Google vs. Bing: The Fallacy Of The Superior Search Engine, gaining a lot of attention. In it, he did a short survey of 20 searches and concluded that Google and Bing weren’t that different.

Time To Talk? Come To Our Event?

The day after that column appeared, I got a call from Google. Would I have time to come talk in person about something they wanted to show me, relating to relevancy? Sure. Checking my calendar, I said January 27, a Thursday, would be a good time for me to fly up from where I work in Southern California to Google’s Mountain View campus.

The day after that, Bing contacted me. They were hosting an event on February 1 to talk about the state of search and wanted to make sure I had the date saved, in case I wanted to come up for it. I said I’d make it. I later learned that the event was being organized by Wadhwa, author of that TechCrunch article.

A change on Google’s end shifted my meeting to January 28, last Friday. As is typical when I visit Google, I had a number of different meetings to talk about various products and issues. My last meeting of the day was with Singhal and Cutts — where they shared everything I’ve described above, explaining this is one reason why Google and Bing might be looking so similar, as our columnist found.

Yes, they wanted the news to be out before the Bing event happened, an event that Google is participating in. They felt it was important for the overall discussion about search quality. But the timing of the news being so close to the event is down to when I could make the trip to Google. If I’d been able to go in earlier, then I might have been writing this a week ago.

Meanwhile, you have this odd timing of Wadhwa’s TechCrunch article and the Bing event he’s organizing. I have no idea if Wadhwa was booked to do the Bing event before his article went out or if he was contracted to do this after, perhaps because Bing saw the debate over Google’s quality kick off and decided it was good to ride it. I’ll try to find out.

In the end, for whatever reasons, the findings of Google’s experiment and Bing’s event are colliding, right in the middle of a renewed focus of attention on search quality. Was this all planned to happen? Gamesmanship by both Google and Bing? Just odd coincidences? I go with the coincidences, myself.

[Postscript: Wadhwa tweeted the event timing was a coincidence. And let me add, my assumption really was that this is all coincidence. I'm pointing it out mainly because there are just so many crazy things all happening at the same time, which some people will inevitably try to connect. Make no mistake. Both Google and Bing play the PR game. But I think what's happening right now is that there's a perfect storm of various developments all coming together at the same time. And if that storm gets people focused on demanding better search quality, I'm happy.]

The Search Voice

In the end, I’ve got some sympathy for Google’s view that Bing is doing something it shouldn’t.

I’ve long written that every search engine has its own “search voice,” a unique set of search results it provides, based on its collection of documents and its own particular method of ranking those.

I like that search engines have each had their own voices. One of the worst things about Yahoo changing over to Bing’s results last year was that in the US (and in many countries around the world), we were suddenly down to only two search voices: Google’s and Bing’s.

For 15 years, I’ve covered search. In all that time, we’ve never had so few search voices as we do now. At one point, we had more than 10. That’s one thing I love about the launch of Blekko. It gave us a fresh, new search voice.

When Bing launched in 2009, the joke was that Bing stood for either “Because It’s Not Google” or “But It’s Not Google.” Mining Google’s searches makes me wonder if the joke should change to “Bing Is Now Google.”

I think Bing should develop its own search voice without using Google’s as a tuning fork. That just doesn’t ring true to me. But I look forward to talking with Bing more about the issue and hopefully getting more clarity from them about what they may be doing and their views on it.

Opening image from Real Genius. They were taking a test. There’s no suggestion that Google is cool Chris Knight or that Bing is dorky Kent (or vice versa). It’s a great movie.
